About Tonative

Data Curation for Under-Resourced Languages

Who We Are

Tonative is a community-driven initiative focused on AI data curation for under-resourced languages, with a strong emphasis on African languages. We bring together native speakers, linguists, and technologists to build, validate, and extend high-quality language datasets that enable inclusive and culturally grounded AI systems.

Our work sits at the intersection of human expertise and machine intelligence, using human-AI collaboration to create datasets that are both scalable and linguistically accurate.

What We Do

Tonative focuses on the creation, extension, and validation of language data for AI, especially in contexts where data scarcity limits technological progress.

Community-driven data curation

We work directly with native speakers and language experts to curate, annotate, and validate text and language data.

Human-AI collaborative dataset extension

We use AI-assisted methods (such as machine translation or language models) combined with human review to efficiently grow high-quality datasets.

African and low-resource language datasets

We prioritize languages that are under-represented in existing AI systems, helping ensure these languages are included in the future of AI.

Open and reusable research outputs

Where possible, we publish datasets, tools, and methodological insights to support broader research and development.

Why We Exist

Most AI systems today are trained on data from a small number of dominant languages. This creates systemic exclusion for communities whose languages and cultural contexts are missing or misrepresented.

Our Focus Areas

Our work centers on several key areas that contribute to creating a more inclusive and ethically responsible AI ecosystem.

Commercial and Open-Source AI Data Curation for Under-Resourced Languages

We focus on the meticulous collection, preparation, and management of data for AI systems, specializing in languages that are often neglected by mainstream AI development. This includes creating datasets for both commercial applications and open-source initiatives.

Language Datasets for NLP and Machine Learning

A core part of our mission is building high-quality, relevant language datasets essential for training robust Natural Language Processing (NLP) models and other machine learning applications.

Annotation and Validation Workflows

We develop and implement rigorous workflows for annotating and validating data. This ensures the accuracy and reliability of the datasets, which is crucial for the performance of the AI models built upon them.

Ethical and Inclusive AI Data Practices

Ethics and inclusion are at the foundation of our work. We adhere to and promote practices that ensure AI data is collected and used responsibly, respects linguistic diversity, and minimizes bias against under-represented communities.

Translation Applications that Enable Easy Access to African Languages

We work on developing and improving translation technologies that make African languages more accessible, facilitating communication and access to information for native speakers and the global community.

Who We Work With

Native Speakers and Language Communities

Our strongest partnerships are with the native speakers and communities whose languages we work with. Their insights and expertise are invaluable to the quality and cultural relevance of our data.

Linguists and Language Technologists

We collaborate with experts in language structure, acquisition, and technology to ensure the scientific rigor and technical excellence of our datasets and applications.

Software Developers

Partnerships with software developers are key to building and deploying the tools, platforms, and applications necessary for data curation, model training, and end-user access.

Academic and Independent AI Researchers and Initiatives

We actively engage with researchers, both in academia and in independent initiatives, to advance the state of the art in AI for low-resource languages.

Organizations Interested in Inclusive and Responsible AI

We partner with organizations, from businesses to non-profits, that are committed to integrating inclusive and responsible principles into their AI strategies and products.

How We Operate

We are a community-first organisation made up mostly of trained language speakers, who are responsible for annotating and validating the datasets we publish. We are always open to new members, provided they speak an African language, have a fair understanding of English (our common working language), and are willing to go through our training program.

Speakers contribute to both free, open-source datasets and paid commercial datasets. Aside from language speakers, we have a strong team of AI researchers and software developers. The researchers identify data gaps that Tonative can help fill and run experiments with the datasets to demonstrate their scientific value, while the software developers build, deploy, and maintain our software tools.

Commercial datasets are usually produced under contract for research organisations, or prepared in advance by us for research organisations to purchase.

Our Vision

We envision a future where African languages are meaningfully represented in AI systems, and their communities have agency over how their languages are represented in technology.

By combining community knowledge with AI tools, Tonative aims to make high-quality language data more accessible, ethical, and inclusive.

Meet Our Team

Sharon Ibejih

Founder

Cynthia Amol

Co-Founder & Head of Data

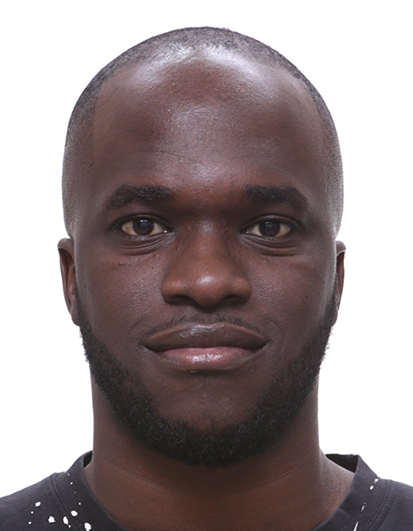

Alfred Kondoro

Head of Research

Damilare Keshinro

Head of AI Translations

Chinenye Anikwenze

Engineering Lead

Joy Naomi Olusanya

Training Manager